Cerebras Systems introduced its Wafer Scale Engine 2 (WSE-2) processor, which touts a record-breaking 2.6 trillion transistors and 850,000 AI-optimized cores and which the company describes as “the largest chip ever built.” Established by SeaMicro founder Andrew Feldman, Cerebras makes a massive chip out of a single wafer rather than following the typical process of slicing the wafer into hundreds of separate chips. This is the company’s second chip built out of an entire wafer, in which the pieces of the chip, dubbed cores, are interconnected so that the transistors work together as one.

VentureBeat reports that Cerebras’ first Wafer Scale Engine (WSE), the previous chip launched in 2019 and used in the CS-1 system, features 400,000 cores and 1.2 trillion transistors on a single wafer and was built with a 16-nanometer manufacturing process. The latest chip is built with a 7-nanometer process, “meaning the width between circuits is seven billionths of a meter … [which] can cram a lot more transistors in the same 12-inch wafer.”

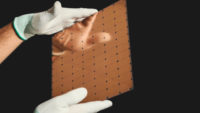

According to Feldman, the circular wafer is cut into an 8-inch-by-8-inch square. Comparing the WSE-2 with the largest GPU, he said, “We have 123 times more cores and 1,000 times more memory on chip and 12,000 times more memory bandwidth and 45,000 times more fabric bandwidth.” He added, “We were aggressive on scaling geometry, and we made a set of microarchitecture improvements.”

“This is a great achievement, especially when considering that the world’s third largest chip is 2.55 trillion transistors smaller than the WSE-2,” said The Linley Group principal analyst Linley Gwennap. VentureBeat points out that the largest graphics processing unit (GPU) has only 54 billion transistors, 2.55 trillion fewer than the WSE-2.
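The 2.55 trillion figure follows directly from the two transistor counts quoted in this article. As a quick check, here is a minimal Python sketch; the variable names are illustrative, and the only inputs are the 2.6 trillion and 54 billion counts cited above.

```python
# Transistor counts as quoted in the article (rounded figures).
WSE2_TRANSISTORS = 2_600_000_000_000  # 2.6 trillion (WSE-2)
GPU_TRANSISTORS = 54_000_000_000      # 54 billion (largest GPU, per VentureBeat)

# Absolute gap and relative size between the two chips.
gap = WSE2_TRANSISTORS - GPU_TRANSISTORS
ratio = WSE2_TRANSISTORS / GPU_TRANSISTORS

print(f"Gap: {gap / 1e12:.2f} trillion transistors")
print(f"Ratio: about {ratio:.0f}x")
```

With these rounded inputs, the gap works out to 2.546 trillion, which rounds to the 2.55 trillion cited, and the WSE-2 has roughly 48 times as many transistors as the GPU.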

Feldman stated that “when you have to build a cluster of GPUs, you have to spread your model across multiple nodes … You have to deal with device memory sizes and memory bandwidth constraints and communication and synchronization overheads.”

The WSE-2 “will power the Cerebras CS-2, the industry’s fastest AI computer … manufactured by contract manufacturer TSMC.” Moor Insights & Strategy analyst Patrick Moorhead said that “Cerebras does deliver the cores promised,” adding that “it does appear to give Nvidia a run for its money but doesn’t run raw CUDA,” which has become a de facto standard. “Nvidia solutions are more flexible as well as they can fit into nearly any server chassis,” he said.

The CS-2, at 26 inches tall, will replace “clusters of hundreds or thousands of graphics processing units (GPUs) that consume dozens of racks, use hundreds of kilowatts of power, and take months to configure and program.”

Tirias Research principal analyst Jim McGregor noted that “obviously, there are companies and entities interested in Cerebras’ wafer-scale solution for large data sets.” He added, “But, there are many more opportunities at the enterprise level for the millions of other AI applications and still opportunities beyond what Cerebras could handle, which is why Nvidia has the SuperPod and Selene supercomputers.” In his view, “Cerebras is more of a niche platform.”

The Cerebras WSE and CS-1 have been deployed at Argonne National Laboratory, Lawrence Livermore National Laboratory, Pittsburgh Supercomputing Center and other leading facilities.
