The Machine: HPE Prototype Intros New Computing Paradigm

November 30, 2016

At Discover 2016 in London this week, Hewlett Packard Enterprise unveiled an early working prototype of The Machine, which began as a research project in 2014. The prototype, housed in a lab in Fort Collins, Colorado, tests a design that will soon be made available to programmers so they can write software that exploits its capabilities. The Machine relies on memory technology to increase calculating speed, and will require a new kind of memory chip that is unlikely to be widely available before 2018.

The Wall Street Journal reports that, given these contingencies, HP Enterprise has not set a date for The Machine’s commercial availability, and that “its near-term plan is to use components developed for the system in its conventional server systems … by 2018 or 2019.”

Already in production is the X1 chip (and related components), which “could make it as fast to transmit data from one machine to another as within a single system” as well as allow “computer designers to stack components vertically to save space.” HP Enterprise hardware products exec Antonio Neri has urged staff to “bring those technologies into our current set of products and road maps faster.”

According to those involved with The Machine, “a complete version … could bring more than hundredfold speedups to chores such as helping airlines respond to service disruptions, preventing credit-card fraud and assessing the risk of vast financial portfolios.” Spotting abnormal activity in “vast numbers of computing transactions” that could signal a cyber attack is another target application.

Whereas today’s systems can analyze “50,000 events a second and compare them with five minutes of prior events,” The Machine could compare 10 million events a second with 14 days of prior events.
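As a rough sanity check on those figures, the short Python sketch below works out the ratios involved. The variable names are illustrative, and treating the number of prior events as simply rate times window length (that is, a constant event rate) is an assumption made here for the arithmetic, not something the article states.

```python
# Figures quoted in the article.
conventional_rate = 50_000               # events per second, today's systems
conventional_window_s = 5 * 60           # five minutes, in seconds
machine_rate = 10_000_000                # events per second, The Machine
machine_window_s = 14 * 24 * 60 * 60     # 14 days, in seconds

# How much faster the event rate is, and how much longer the look-back window.
rate_ratio = machine_rate // conventional_rate
window_ratio = machine_window_s // conventional_window_s

# Assumption (not from the article): a constant event rate, so the number of
# prior events held for comparison is rate * window.
conventional_history = conventional_rate * conventional_window_s
machine_history = machine_rate * machine_window_s

print(f"Event rate: {rate_ratio}x higher")
print(f"Look-back window: {window_ratio}x longer")
print(f"Prior events (conventional): {conventional_history:,}")
print(f"Prior events (The Machine): {machine_history:,}")
```

Under that assumption, the quoted numbers imply a 200-fold higher event rate and a look-back window roughly 4,000 times longer.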

HP Enterprise chief architect and vice president Kirk Bresniker notes that conventional computers break up big calculating jobs to be handled by individual microprocessors, which retrieve “data from sets of memory chips or devices like disk drives” and often “have to wait as data are copied and transferred back and forth among the various components.”

The Machine, says Bresniker, “is designed to make those data transfers unnecessary” because “hundreds to thousands of processors simultaneously can tap into data stored in a vast pool of memory contained in one server or multiple boxes in a data center.”

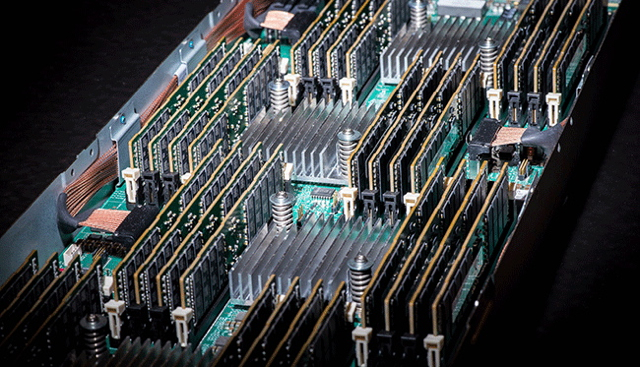

The Machine prototype consists of two modules with 8 terabytes of memory, “roughly 30 times the amount found in many conventional servers — with hundreds of terabytes expected in the future as more chips and modules are linked together.”

The move toward different kinds of computing is driven by Silicon Valley companies’ concern about the “growing flood of data from connected devices,” as well as by the fact that improvements in microprocessor chips are not as robust as they once were. “We are in a stage where stuff we were doing before has to change,” said Sandia National Laboratories’ Erik DeBenedictis. “That hasn’t happened for a very long time.”
