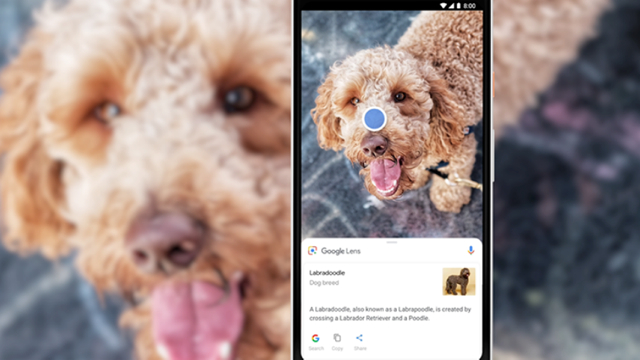

During this week’s Google I/O conference, the importance of Google Lens to chief executive Sundar Pichai’s AI-first strategy became apparent. Google Lens combines computer vision and natural language processing with Google Search in a product aimed at consumers. Lens, described as “Google’s engine for seeing, understanding, and augmenting the real world,” resides in the camera viewfinder of Assistant and, soon, of top-end Android smartphones. Lens recognizes people, animals, objects, environments and text.

The Verge reports that “anything a human can recognize is fair game for Lens,” which then uses Search’s knowledge base “to surface actionable info like purchase links for products and Wikipedia descriptions of famous landmarks.” “The goal,” it says, “is to give users context about their environments and any and all objects within those environments.”

First announced at I/O 2017, Lens works in Google Assistant and the Google Photos app, and is now part of “the Android camera on Google Pixel devices, as well as flagship phones from LG, Motorola, Xiaomi, and others.” Lens has also improved, now working in real time to “parse text as it appears in the real world” and recognizing “the style of clothing and furniture to power a recommendation engine the company calls Style Match.”

It’s also being used to “power new features in adjacent products like Google Maps.” Matched with Google Translate, Lens can also translate “dinner menu items or even big chunks of text in a book” in real time.

“This is where Google Lens really shines by merging the company’s strengths across a number of products simultaneously,” suggests The Verge. “Google Lens is much more powerful one year into its existence, and Google is making sure it can live on as many devices, including Apple-made ones, and within as many layers of those devices as possible.”

Wired reports that Google Lens’ new features will debut at the end of May. Google vice president of product for AR, VR and vision-based products Aparna Chennapragada described its integration with smartphone cameras “like a visual browser for the world around you.”

“By now people have the muscle memory for taking pictures of all sorts of things, not just sunsets and selfies but the parking lot where you parked, business cards, books to read,” she said. “That’s a massive behavior shift.” Google Lens will be built directly into the camera on 10 Android phones, including “handsets from Asus, Motorola, Xiaomi, and OnePlus.” A physical button on the new LG G7 ThinQ, when pressed twice, will automatically open Lens.

With Google Lens, says Wired, the company is doubling down on its core competency of search, except now the user can just point her smartphone camera at something to learn more. In fact, with Lens, the camera will start scanning the environment as soon as it’s opened.

“We realized that you don’t always know exactly the thing you want to get the answer on,” said Google vice president of VR and AR Clay Bavor. “So instead of having Lens work where you have to take a photo to get an answer, we’re using Lens Real-Time, where you hold up your phone and Lens starts looking at the scene [then].”

For more information on Google Lens, visit the Google Blog.
